Docker Principles

###What does Docker add to just plain LXC?

Docker is not a replacement for LXC. “LXC” refers to capabilities of the Linux kernel (specifically namespaces and control groups) that allow processes to be sandboxed from one another and their resource allocations to be controlled.

On top of this low-level foundation of kernel features, Docker offers a high-level tool with several powerful functionalities:

####Portable deployment across machines

Docker defines a format for bundling an application and all its dependencies into a single object which can be transferred to any Docker-enabled machine and executed there with the guarantee that the execution environment exposed to the application will be the same. LXC implements process sandboxing, which is an important prerequisite for portable deployment, but it is not sufficient on its own. If you sent me a copy of your application installed in a custom LXC configuration, it would almost certainly not run on my machine the way it does on yours, because it is tied to your machine’s specific configuration: networking, storage, logging, distro, etc. Docker defines an abstraction for these machine-specific settings, so that the exact same Docker container can run - unchanged - on many different machines, with many different configurations.

####Application-centric

Docker is optimized for the deployment of applications, as opposed to machines. This is reflected in its API, user interface, design philosophy and documentation. By contrast, the lxc helper scripts focus on containers as lightweight machines - basically servers that boot faster and need less RAM. We think there’s more to containers than just that.

####Automatic build

Docker includes a tool for developers to automatically assemble a container from their source code, with full control over application dependencies, build tools, packaging etc. They are free to use make, maven, chef, puppet, salt, debian packages, rpms, source tarballs, or any combination of the above, regardless of the configuration of the machines.

####Versioning

Docker includes git-like capabilities for tracking successive versions of a container, inspecting the diff between versions, committing new versions, rolling back etc. The history also includes how a container was assembled and by whom, so you get full traceability from the production server all the way back to the upstream developer. Docker also implements incremental uploads and downloads, similar to “git pull”, so new versions of a container can be transferred by only sending diffs.
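The idea of transferring only diffs can be illustrated with a small Python sketch. This is a toy model under stated assumptions, not Docker's actual transfer protocol: an image version is represented as an ordered list of layer IDs (base first), and both the layer IDs and the `layers_to_transfer` helper are hypothetical names chosen for illustration.

```python
def layers_to_transfer(local_layers, remote_layers):
    """Return the layers of the remote image that are missing locally.

    Both arguments are lists of layer IDs ordered from base to top.
    Toy model only: a real registry negotiates this over a network API.
    """
    have = set(local_layers)
    return [layer for layer in remote_layers if layer not in have]


# Hypothetical layer IDs for two successive versions of the same image.
v1 = ["base-ubuntu", "python-env", "app-v1"]
v2 = ["base-ubuntu", "python-env", "app-v2"]

missing = layers_to_transfer(v1, v2)
print(missing)  # only the changed top layer needs to be sent
```

Because the two versions share their lower layers, only the layer that differs has to travel over the network.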

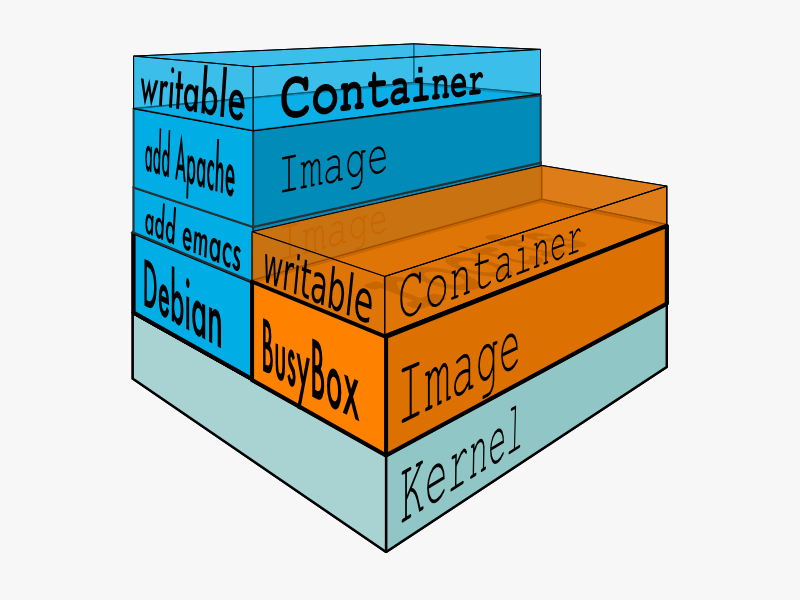

####Component re-use

Any container can be used as a “base image” to create more specialized components. This can be done manually or as part of an automated build. For example, you can prepare the ideal python environment, and use it as a base for 10 different applications. Your ideal postgresql setup can be re-used for all your future projects. And so on.

####Sharing

Docker has access to a public registry (http://index.docker.io) where thousands of people have uploaded useful containers: anything from redis, couchdb, postgres to irc bouncers to rails app servers to hadoop to base images for various distros. The registry also includes an official “standard library” of useful containers maintained by the Docker team. The registry itself is open-source, so anyone can deploy their own registry to store and transfer private containers, for internal server deployments for example.

####Tool ecosystem

Docker defines an API for automating and customizing the creation and deployment of containers. A large number of tools integrate with Docker to extend its capabilities: PaaS-like deployment (Dokku, Deis, Flynn), multi-node orchestration (maestro, salt, mesos, openstack nova), management dashboards (docker-ui, openstack horizon, shipyard), configuration management (chef, puppet), continuous integration (jenkins, strider, travis), and so on. Docker is rapidly establishing itself as the standard for container-based tooling.

###Docker component

####File System

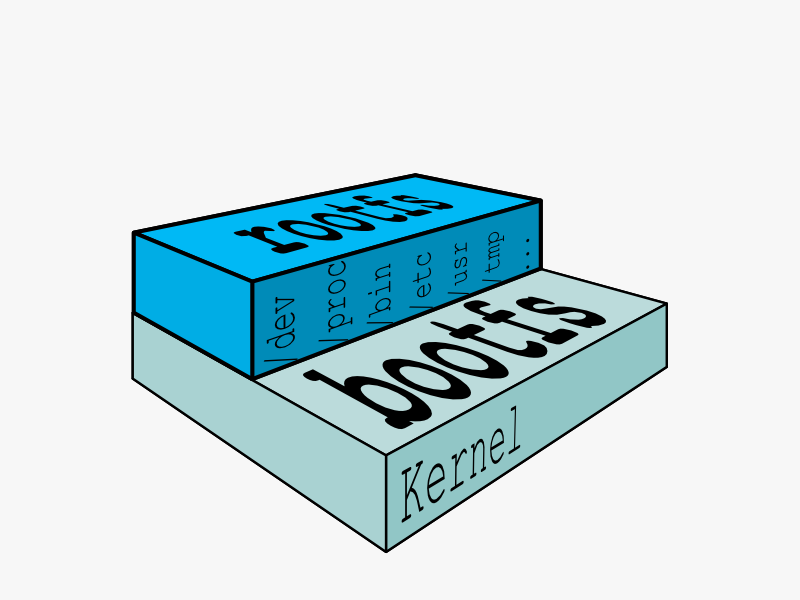

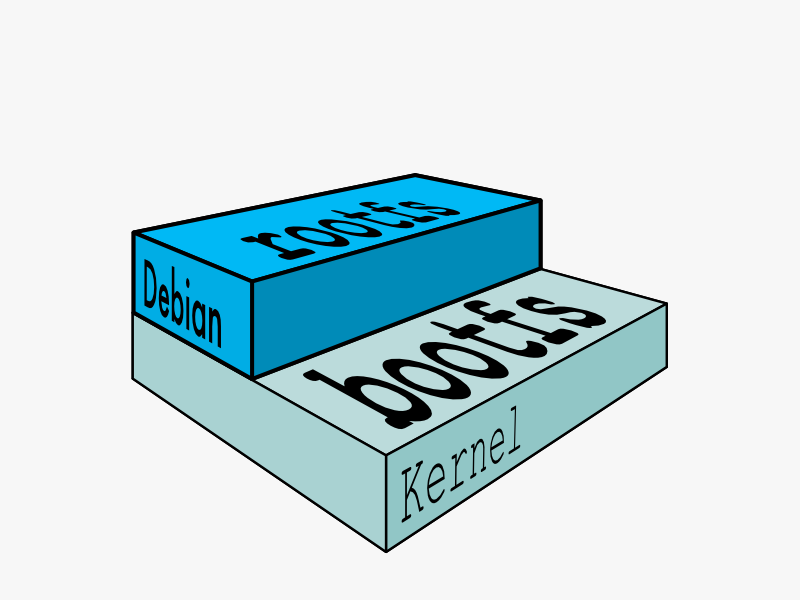

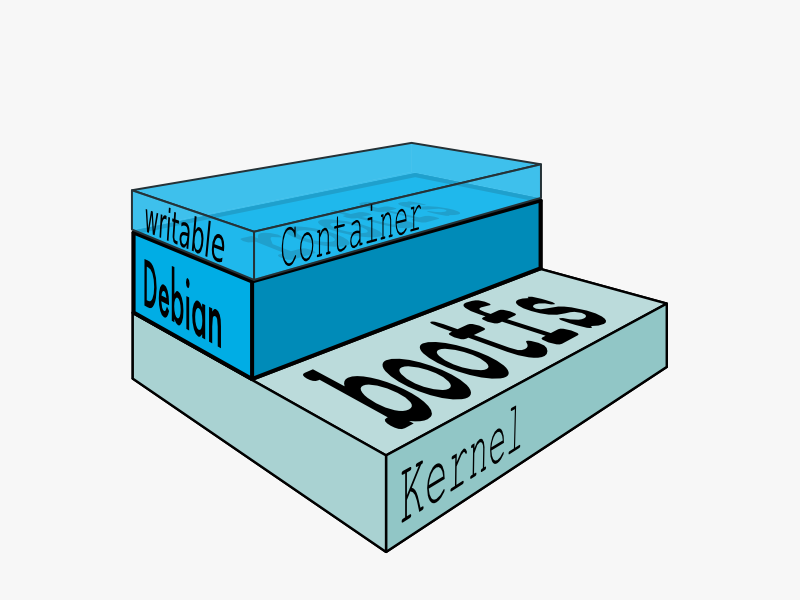

In order for a Linux system to run, it typically needs two file systems:

- boot file system (bootfs)

- root file system (rootfs)

The boot file system contains the bootloader and the kernel. The user never makes any changes to the boot file system. In fact, soon after the boot process is complete, the entire kernel is in memory, and the boot file system is unmounted to free up the RAM associated with the initrd disk image.

The root file system includes the typical directory structure we associate with Unix-like operating systems: `/dev`, `/proc`, `/bin`, `/etc`, `/lib`, `/usr`, and `/tmp`, plus all the configuration files, binaries and libraries required to run user applications (like bash, ls, and so forth).

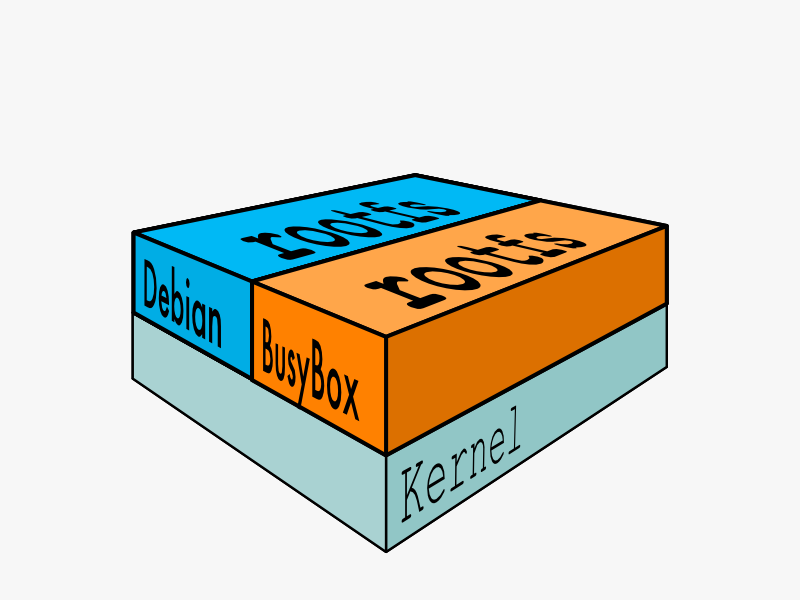

While there can be important kernel differences between different Linux distributions, the contents and organization of the root file system are usually what make your software packages dependent on one distribution versus another. Docker can help solve this problem by running multiple distributions at the same time.

####Layers

In a traditional Linux boot, the kernel first mounts the root file system as read-only, checks its integrity, and then switches the whole rootfs volume to read-write mode.

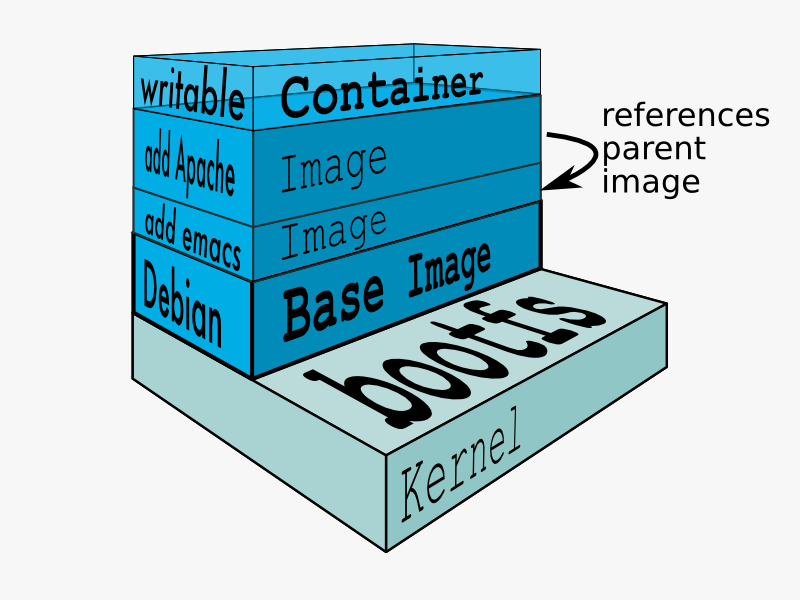

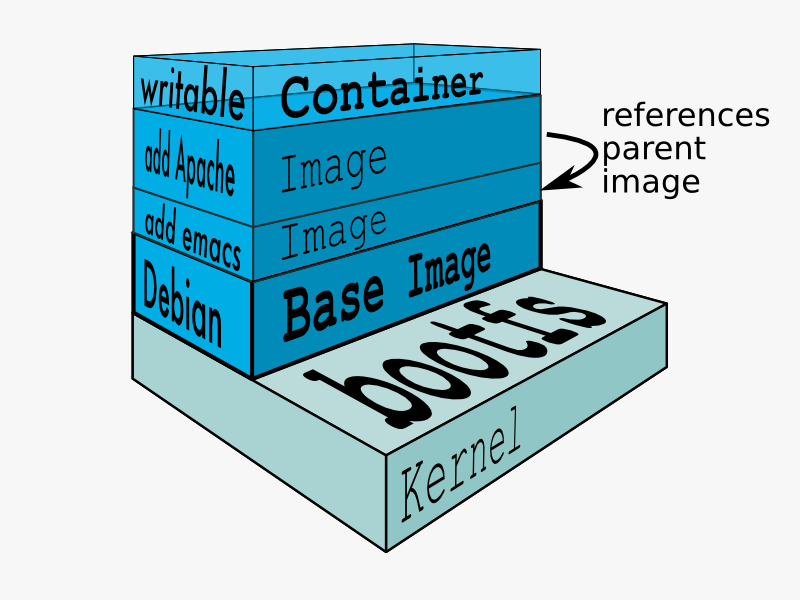

When Docker mounts the rootfs, it starts read-only, as in a traditional Linux boot, but then, instead of changing the file system to read-write mode, it takes advantage of a union mount to add a read-write file system over the read-only file system. In fact there may be multiple read-only file systems stacked on top of each other. We think of each one of these file systems as a layer.

At first, the top read-write layer has nothing in it, but any time a process creates a file, this happens in the top layer. And if something needs to update an existing file in a lower layer, then the file gets copied to the upper layer and changes go into the copy. The version of the file on the lower layer cannot be seen by the applications anymore, but it is there, unchanged.
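The copy-on-write behavior described above can be modeled in a few lines of Python. This is a minimal sketch and not how a real union mount is implemented: each layer is a plain dictionary from path to content, the `UnionFS` class and its method names are hypothetical, and file deletion is not modeled at all.

```python
class UnionFS:
    """Toy model of a union mount: read-only layers plus one writable top layer."""

    def __init__(self, read_only_layers):
        # Each layer is a dict mapping path -> content; index 0 is the lowest layer.
        self.read_only_layers = read_only_layers
        self.top = {}  # the read-write layer starts empty

    def read(self, path):
        # Search from the top layer downwards; the first match wins.
        for layer in [self.top] + self.read_only_layers[::-1]:
            if path in layer:
                return layer[path]
        raise FileNotFoundError(path)

    def write(self, path, content):
        # New files always land in the top layer.
        self.top[path] = content

    def append(self, path, extra):
        # Copy-up: take the visible content, then store the changed copy on top.
        self.top[path] = self.read(path) + extra


base = {"/etc/motd": "hello\n"}
fs = UnionFS([base])
fs.append("/etc/motd", "world\n")   # updates an existing lower-layer file
fs.write("/tmp/new", "data")        # creates a brand-new file

print(fs.read("/etc/motd"))  # the modified copy from the top layer
print(base["/etc/motd"])     # the lower layer still holds the original
```

Reads see the modified copy because the top layer is searched first, while the dictionary standing in for the lower layer is never touched.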

####Union File System

We call the union of the read-write layer and all the read-only layers a union file system.

####Image

In Docker terminology, a read-only layer is called an image. An image never changes.

Since Docker uses a union file system, the processes think the whole file system is mounted read-write. But all the changes go to the top-most writable layer, and underneath, the original file in the read-only image is unchanged. Since images don't change, images do not have state.

#####Parent Image

Each image may depend on one other image, which forms the layer beneath it. We sometimes say that the lower image is the parent of the upper image.


#####Base Image

An image that has no parent is a base image.
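The parent and base image relationship amounts to a linked chain, which the following Python sketch walks from an image down to its base. It is an illustration only: the image names and the `layer_chain` helper are hypothetical, and the `parents` dictionary stands in for whatever metadata Docker actually stores.

```python
def layer_chain(image_id, parents):
    """Return image IDs from the given image down to its base image.

    `parents` maps an image ID to its parent ID, or to None for a base image.
    """
    chain = []
    current = image_id
    while current is not None:
        chain.append(current)
        current = parents[current]
    return chain


# Hypothetical images: one base image reused by two applications.
parents = {
    "ubuntu": None,            # no parent, so this is a base image
    "python-env": "ubuntu",
    "web-app": "python-env",
    "worker": "python-env",
}

print(layer_chain("web-app", parents))
print(layer_chain("worker", parents))
```

Both applications end at the same base image, which is why a shared environment only needs to be stored once.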

#####Image IDs

All images are identified by a 64-digit hexadecimal string (internally a 256-bit value). To simplify their use, a short ID of the first 12 characters can be used on the command line. There is a small possibility of short ID collisions, so the Docker server will always return the long ID.
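Resolving a short ID is essentially a prefix lookup that must reject ambiguous matches. The sketch below shows one way this could work; it is not Docker's implementation. The `resolve_short_id` helper is a hypothetical name, and the SHA-256 digests are used only as stand-ins that happen to have the right length, since how Docker derives its IDs is not modeled here.

```python
import hashlib


def resolve_short_id(prefix, full_ids):
    """Return the unique full ID starting with `prefix`.

    Raises LookupError if no ID matches or if the prefix is ambiguous.
    """
    matches = [full_id for full_id in full_ids if full_id.startswith(prefix)]
    if not matches:
        raise LookupError(f"no ID starts with {prefix!r}")
    if len(matches) > 1:
        raise LookupError(f"prefix {prefix!r} is ambiguous: {len(matches)} matches")
    return matches[0]


# Stand-in IDs: a SHA-256 hex digest is 64 hex characters (256 bits).
ids = [hashlib.sha256(name.encode()).hexdigest() for name in ("a", "b", "c")]
short = ids[0][:12]  # the 12-character short form

print(short, "->", resolve_short_id(short, ids))
```

Returning the long ID from the lookup avoids any later ambiguity if another ID with the same 12-character prefix appears.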

####Container

Once you start a process in Docker from an image, Docker fetches the image and its parent image, and repeats the process until it reaches the base image. Then the union file system adds a read-write layer on top. That read-write layer, plus the information about its parent image and some additional information such as its unique ID, networking configuration, and resource limits, is called a container.

#####Container State

Containers can change, and so they have state. A container may be running or exited. When a container is running, the idea of a “container” also includes a tree of processes running on the CPU, isolated from the other processes running on the host.

When the container is exited, the state of the file system and its exit value are preserved. You can start, stop, and restart a container. The processes restart from scratch (their memory state is not preserved in a container), but the file system is just as it was when the container was stopped.
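The difference between what survives a restart and what does not can be sketched as a tiny state model in Python. This is a deliberately simplified illustration: the `Container` class, its attributes, and its methods are hypothetical and do not correspond to any Docker API.

```python
class Container:
    """Toy model: file system state survives a restart, process memory does not."""

    def __init__(self):
        self.filesystem = {}   # stands in for the read-write layer
        self.memory = None     # stands in for process memory; None while exited
        self.status = "exited"
        self.exit_code = None

    def start(self):
        self.memory = {}       # processes always begin with fresh memory
        self.status = "running"

    def stop(self, exit_code=0):
        self.memory = None     # in-memory state is discarded
        self.exit_code = exit_code
        self.status = "exited"


c = Container()
c.start()
c.filesystem["/data/log"] = "first run"   # written to the read-write layer
c.memory["counter"] = 41                  # lives only in process memory
c.stop(exit_code=0)
c.start()                                 # restart the same container

print(c.filesystem)  # the file written during the first run is still there
print(c.memory)      # memory is empty again after the restart
```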

You can promote a container to an image with `docker commit`. Once a container is an image, you can use it as a parent for new containers.

#####Container IDs

All containers are identified by a 64-digit hexadecimal string (internally a 256-bit value). To simplify their use, a short ID of the first 12 characters can be used on the command line. There is a small possibility of short ID collisions, so the Docker server will always return the long ID.

###Tips

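Remove all containers:

    # -a lists stopped containers too; -q prints only the IDs
    for i in $(sudo docker ps -a -q); do sudo docker rm "$i"; done

Remove all images shown as `<none>`:

    # match only rows containing <none>, then take the IMAGE ID column
    for i in $(sudo docker images | awk '/<none>/ { print $3 }'); do sudo docker rmi "$i"; done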

###References

docker vs LXC